How Can We Decide Which Variable Selection We Will Use

Particularly cautious interpretation of results. Then they determine whether the observed data fall outside of the range of values.

Machine Learning Life Cycle Machine Learning Life Cycles Data Science

We could use the individual p-values and refit the model with only significant terms.

Statistical tests can be used to select those features that have the strongest relationship with the output variable. With the values 0.52237162, 0.26335492, 0.58125401 and 0.56561105, we can conclude that features 1, 3 and 4 (Var 1, 3 and 4 in the biplot) are the most important. Correlation is a statistical term which, in common usage, refers to how close two variables are to having a linear relationship with each other.
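As a small illustration of the ranking described above, the sketch below sorts features by the size of their loadings. It assumes the four numbers quoted in the text are absolute PC1 loadings with a leading decimal point; the feature labels and variable names are hypothetical.

```python
# Absolute loadings on the first principal component.
# Assumption: the values quoted in the text are loadings of the form 0.xxx.
pc1_loadings = {
    "feature 1": 0.52237162,
    "feature 2": 0.26335492,
    "feature 3": 0.58125401,
    "feature 4": 0.56561105,
}

# Rank features from the largest to the smallest absolute loading.
ranked = sorted(pc1_loadings, key=pc1_loadings.get, reverse=True)

for name in ranked:
    print(f"{name}: {pc1_loadings[name]:.4f}")
```

Under that assumption, feature 2 ranks last, which matches the conclusion that features 1, 3 and 4 matter most.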

Even if this distinction can be a little bit confusing, since we use for both objectives the same criterion related to prediction performance, the idea is the following. Or, in other words, how much variance in a continuous dependent variable is explained by a set of predictors. The first is a matrix or data frame of the explanatory variables, the second is the threshold value to use for retaining variables, and the third is a logical argument indicating if text output is.

Feature selection is a key factor in building accurate machine learning models. Let's say that, for any given dataset, the machine learning model learns the mapping between the input features and the target variable. Here we have a lift force F, speed of the aircraft V, sound speed of air c, aircraft wing area A, and freestream density rho. Dependent variable = constant + parameter × IV + ... + parameter × IV.

The new (p - 1)-variable model is fit, and the variable with the largest p-value is removed. Selection Process for Multiple Regression. Decide whether variable selection should be applied, which IVs are considered, and which variable selection method is employed.

So, for a new dataset where the target is unknown, the model can accurately predict the target variable. This is also clearly visible from the biplot; that's why we often use this plot to summarize the information in a visual. Using the study and the data, we introduce four methods for variable selection.

The form is linear in the parameters because all terms are either the constant or a parameter multiplied by an independent variable (IV). Remove the variable with the largest p-value, that is, the variable that is the least statistically significant. (1) all possible subsets (best subsets) analysis, (2) backward elimination, (3) forward selection, and (4) stepwise selection/regression.

If variable selection is applied it should be accompanied by stability. Estimate the difference between two or more groups. With modern computing power it is feasible to fit all 2^10 = 1024 possible combinations of explanatory variables and choose the best model by one of a number of possible criteria, e.g. AIC, BIC, or predictive power (for example, the ability to predict the values of a test subset of the data that you have separated from the set you use to fit your model).
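To make the count concrete, here is a minimal sketch that enumerates every subset of 10 explanatory variables and picks the best one under a scoring function. The variable names and `toy_score` are hypothetical stand-ins; in practice the score would be AIC, BIC, or a held-out prediction error computed from a fitted model.

```python
from itertools import combinations


def all_subsets(variables):
    """Yield every subset of the variables, including the empty one."""
    for size in range(len(variables) + 1):
        yield from combinations(variables, size)


def best_subset(variables, score):
    """Return the subset with the lowest score (lower is better, as with AIC)."""
    return min(all_subsets(variables), key=score)


variables = [f"x{i}" for i in range(1, 11)]
subsets = list(all_subsets(variables))
print(len(subsets))  # 2**10 candidate models, counting the intercept-only one

# Hypothetical score: heavy penalty for missing a useful variable,
# small penalty for every variable included.
useful = {"x2", "x5"}


def toy_score(subset):
    missing = len(useful - set(subset))
    return 10 * missing + len(subset)


best = best_subset(variables, toy_score)
print(best)
```

The number of subsets doubles with every added variable, so exhaustive search stays practical only for a modest number of candidates.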

In practice, you can make use of a simple rule, the disjunctive cause criterion, which states that. 1. List and count the n variables in the problem. Determine whether a predictor variable has a statistically significant relationship with an outcome variable.

We discuss how five common misconceptions often lead to inappropriate application of variable selection. So feature selection plays a huge role in building a machine learning model. A linear regression equation simply sums the terms.

This gives us n = 5 variables. They can be used to. Statistical tests assume a null hypothesis of no relationship or no difference between groups.

We emphasize that variable selection and all problems related with it can often be avoided by the use of expert knowledge. The first objective called. Variable Selection in Multiple Regression.

On this problem there is a trade-off between the number of features and test set accuracy, and we could decide to take a less complex model (fewer attributes, such as n=4) and accept a modest decrease in estimated accuracy from 77.95% down to 76.38%. How to choose variables based on causal understanding. In this article we will explore two measures that we can use on the data to select the right features.

Popular Feature Selection Methods in Machine Learning. You should control for variables that either cause the exposure or the outcome or both. We can see that the performance of the model generally decreases with the number of selected features.

We advise not to consider variable selection on strong IVs and to subject IVs with unclear role to variable selection only with a sufficient sample size. The scikit-learn library provides the SelectKBest class that can be used with a suite of different statistical tests to select a specific number of features. Continue until a stopping rule is reached.

The basis of a multiple linear regression is to assess whether one continuous dependent variable can be predicted from a set of independent or predictor variables. The function uses three arguments. Start with all variables in the model.
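The backward elimination steps described in this article (start with all variables, remove the least significant one, refit, and continue until a stopping rule is reached) could be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: it uses NumPy, the function names are hypothetical, and the stopping rule is a threshold of 2 on the absolute t statistic as a rough stand-in for a p-value cutoff. Within one fitted model, the variable with the smallest |t| is the one with the largest p-value.

```python
import numpy as np


def t_statistics(X, y):
    """Fit OLS with an intercept; return the t statistic of each slope."""
    n = X.shape[0]
    A = np.column_stack([np.ones(n), X])          # add intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    dof = n - A.shape[1]                          # residual degrees of freedom
    sigma2 = resid @ resid / dof
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(A.T @ A)))
    return (beta / se)[1:]                        # drop the intercept


def backward_elimination(X, y, names, t_threshold=2.0):
    """Drop the weakest variable one at a time until all pass the threshold."""
    kept = list(range(X.shape[1]))                # start with all variables
    while kept:
        t = np.abs(t_statistics(X[:, kept], y))
        weakest = int(np.argmin(t))               # smallest |t| = largest p-value
        if t[weakest] >= t_threshold:             # stopping rule (assumed)
            break
        kept.pop(weakest)                         # remove, then refit
    return [names[i] for i in kept]


# Synthetic data: only x0 and x2 influence y.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(size=200)

selected = backward_elimination(X, y, ["x0", "x1", "x2", "x3"])
print(selected)
```

A noise variable can still survive by chance, which is one reason the article recommends pairing variable selection with a check of how stable the selection is.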

A natural next question to ask is which predictors, among a larger set of all potential predictors, are important. In VBA we can select any range of cells or a group of cells and perform different operations on them. Selection is a Range object, so we use the Range method to identify the cells and the Select method to select them; the syntax is `Range("A1:B2").Select`.

Thus, by looking at PC1 (the first principal component), which is the first row. While the model must be linear in the parameters, you can raise an independent variable by an exponent to fit a curve.

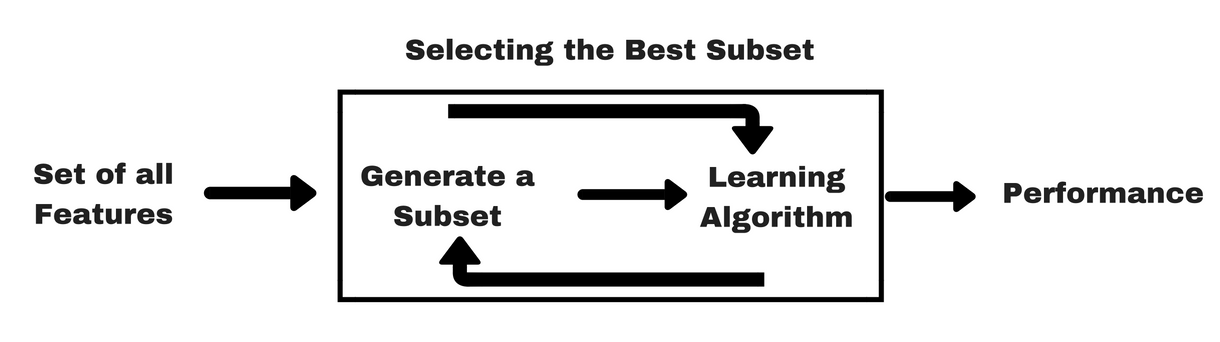

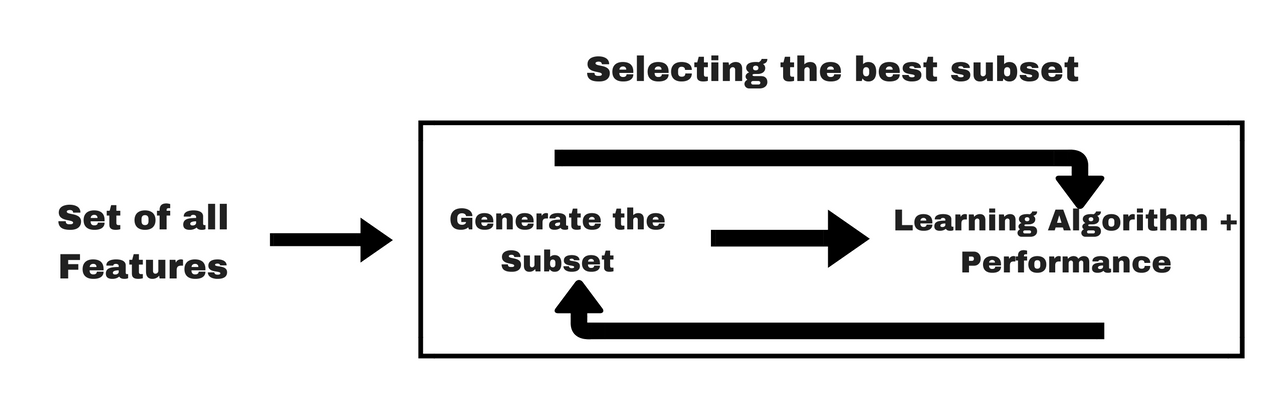

`fs = SelectKBest(score_func=chi2, k='all')`, `fs.fit(X_train, y_train)`, `X_train_fs = fs.transform(X_train)`, `X_test_fs = fs.transform(X_test)`. We can then print the scores for each variable (largest is better) and plot the scores for each variable as a bar graph to get an idea of how many features we should select. You don't need to determine the causal relationship between ALL variables in your model. In Genuer et al. (2010b) we distinguished two variable selection objectives, referred to as interpretation and prediction.
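The `SelectKBest` calls quoted above can be put together into a small runnable sketch. The data here is synthetic and hypothetical (count features, since `chi2` requires non-negative values), and only the first column is constructed to depend on the target.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import train_test_split

# Synthetic, hypothetical data: three non-negative count features.
rng = np.random.default_rng(0)
n_samples = 300
y = rng.integers(0, 2, size=n_samples)
X = np.column_stack([
    rng.poisson(2 + 4 * y),            # depends on the target
    rng.poisson(3, size=n_samples),    # noise
    rng.poisson(3, size=n_samples),    # noise
])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=1
)

# k='all' keeps every feature so that all scores can be inspected first.
fs = SelectKBest(score_func=chi2, k="all")
fs.fit(X_train, y_train)
X_train_fs = fs.transform(X_train)
X_test_fs = fs.transform(X_test)

# Larger scores indicate a stronger relationship with the target.
for i, score in enumerate(fs.scores_):
    print(f"Feature {i}: {score:.3f}")
```

Once the scores have been inspected, `k` can be set to an integer to keep only the top-scoring features.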

When we fit a multiple regression model, we use the p-value in the ANOVA table to determine whether the model as a whole is significant. 2. List the dimensions of each variable according to their fundamental dimensions.
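The two dimensional-analysis steps (count the variables, then list their fundamental dimensions) can be illustrated for the lift problem with F, V, c, A and rho. The sketch below writes each variable as exponents of mass, length and time and computes the rank of the resulting matrix. The final subtraction, n minus rank, is the Buckingham pi count of independent dimensionless groups; that step is an addition here and is not stated in the text. The helper name `matrix_rank` is hypothetical.

```python
from fractions import Fraction

# Exponents of (mass, length, time) for each variable.
dimensions = {
    "F":   (1, 1, -2),    # force: M L T^-2
    "V":   (0, 1, -1),    # speed: L T^-1
    "c":   (0, 1, -1),    # sound speed: L T^-1
    "A":   (0, 2, 0),     # area: L^2
    "rho": (1, -3, 0),    # density: M L^-3
}


def matrix_rank(rows):
    """Rank by Gaussian elimination with exact fractions."""
    m = [[Fraction(v) for v in row] for row in rows]
    rank = 0
    for col in range(len(m[0])):
        # Find a row at or below the current rank with a nonzero entry.
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
    return rank


n = len(dimensions)                            # step 1: count the variables
rank = matrix_rank(list(dimensions.values()))  # step 2: independent dimensions
n_groups = n - rank                            # Buckingham pi count (assumed step)
print(n, rank, n_groups)
```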

Feature Selection Methods Machine Learning
